The recent launch of the world’s first AI-powered hospital in China has sent shock waves through the medical community.

Named “Agent Hospital”, this groundbreaking facility in Beijing features 14 AI doctors and 4 virtual nurses, capable of treating 10,000 patients in just days, a task that would take human doctors up to two years.

This astonishing leap forward highlights both the immense potential and profound challenges posed by artificial intelligence in healthcare.

Artificial intelligence has steadily made inroads into medicine, from diagnostic tools to robotic surgery. But the recent development in China represents an entirely new scale of AI integration in patient care. As anaesthesiologists and intensivists, we now find ourselves at the forefront of a technological revolution that is rapidly transforming our field. The pace of innovation in AI and automation is outstripping our ability as humans to fully comprehend and adapt to these changes. While increased efficiency and reduced workloads may seem like clear benefits, we must step back and carefully consider the broader implications.

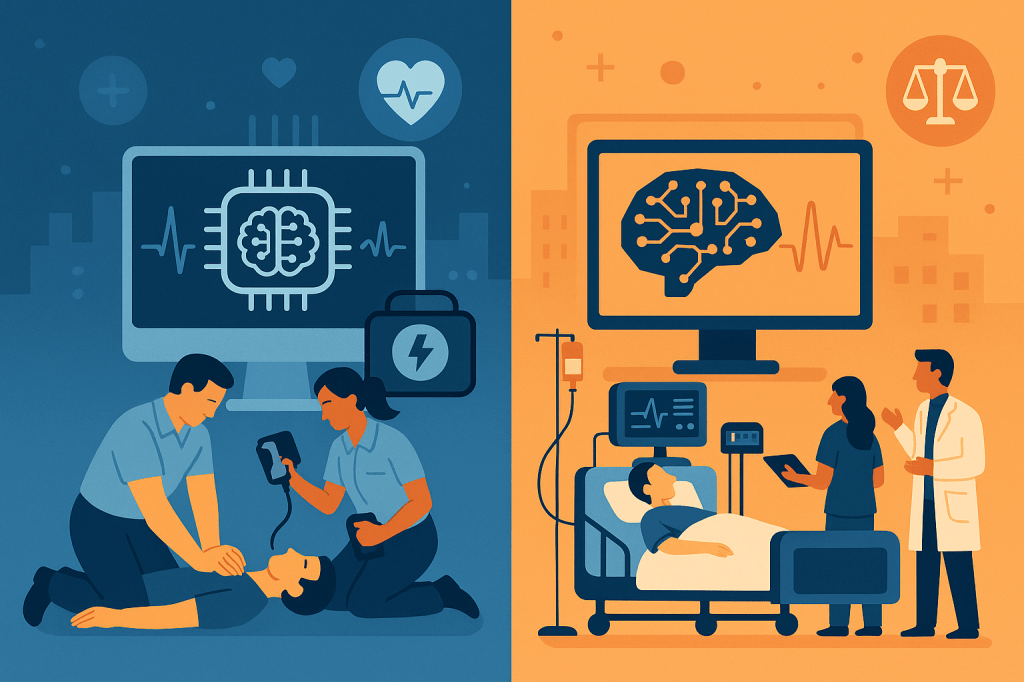

Innovation driven solely by metrics like patient throughput or cost reduction risks losing sight of our fundamental mission—to provide compassionate, patient-centered care that improves overall well-being and quality of life. As Montomoli et al. argue in their recent paper, it is essential to examine the ethical dimensions of AI in critical care settings. These technologies, powerful as they are, can unintentionally reshape the priorities of healthcare systems, placing efficiency ahead of more nuanced considerations like human dignity, informed consent, and quality of life.

One of the most pressing concerns is the potential misalignment of interests between key stakeholders—patients, doctors, hospital administrators, and technology companies. Patients may have differing views on quality of life, especially when it comes to invasive interventions or end-of-life care. Doctors may disagree on the best clinical approach, while hospital administrators might be tempted to implement AI systems based on their potential to reduce costs. And the priorities of technology companies, often driven by commercial interests, may not always align with those of healthcare professionals.

Patient autonomy could also be at risk. Algorithmic systems optimised for speed and cost-efficiency might prioritise standardised care over individualised treatment plans, potentially undermining the trust that is foundational to the doctor-patient relationship. Patients need to feel that their healthcare decisions are being made with their unique circumstances in mind—not just based on what an algorithm predicts as the most efficient course of action.

As our group has already pointed out, the ethical integration of AI requires transparency and a clear understanding of how these systems arrive at their decisions. The use of AI in healthcare should not become a black box, where decisions are made by inscrutable algorithms without a human in the loop. We must ensure that AI systems are held to the highest standards of accountability and that their role in care is clearly communicated to both clinicians and patients.

This situation is further complicated by our own cognitive biases. As Kahneman’s work on cognitive psychology demonstrates, humans are prone to biases like availability bias—prioritising recent, easily recalled information—and present bias, which favours short-term gains over long-term consequences. These cognitive shortcuts, while useful in survival situations, can lead us to focus on the immediate benefits of AI, such as increased throughput, while ignoring the potential for unintended, long-term consequences for patient care and medical ethics.

The integration of AI into anaesthesia and critical care presents a profound responsibility. We cannot passively allow these technologies to reshape our profession and the patient experience. Instead, we must actively guide how AI and automation are integrated into our practice. This requires us to slow down, engage in thorough ethical deliberation, and ensure that the primary goal remains the long-term improvement of human well-being rather than short-term efficiency gains.

The AI revolution in healthcare is here, whether we are ready or not. However, by approaching these innovations thoughtfully and responsibly, we can harness their potential while staying true to the core values of our profession. I urge my colleagues in anaesthesia and intensive care to engage in the conversation, participate in shaping guidelines, and ensure that the future of medicine continues to prioritise patients’ autonomy, dignity, and well-being above all else.

The future of anaesthesiology and critical care is in our hands. Let’s make sure it’s one that puts patients first.
